Richard Newcombe, PhD.

I hold a Postdoctoral Associate position at the University of Washington, where I work on computer vision, advised by Steve Seitz and Dieter Fox.

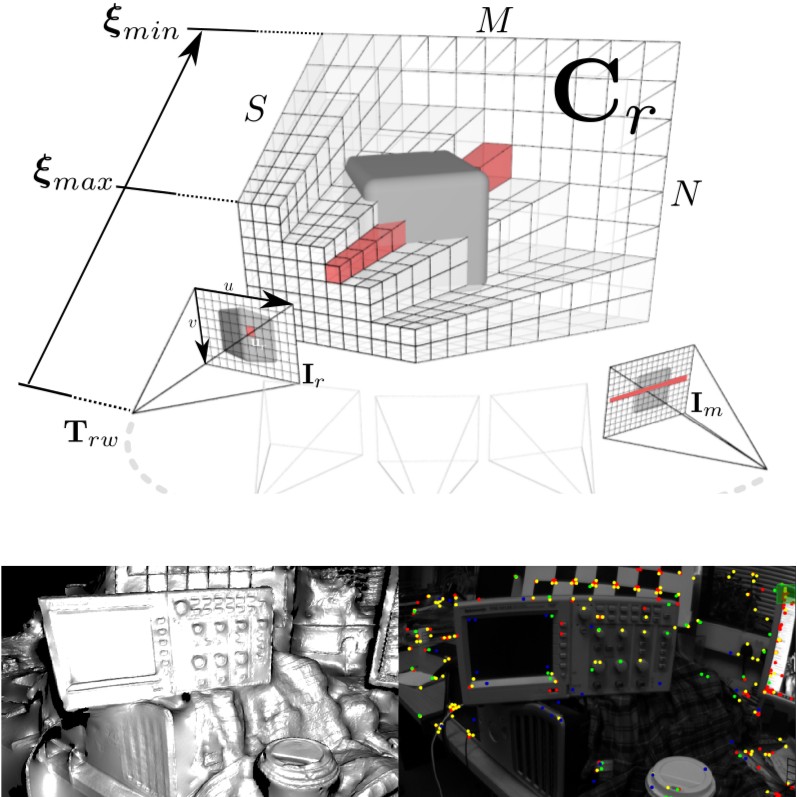

I researched robot vision for my PhD with Andrew Davison and Murray Shanahan at Imperial College London. Before that, I studied with Owen Holland at the University of Essex, where I received my BSc and MSc in robotics, machine learning and embedded systems.

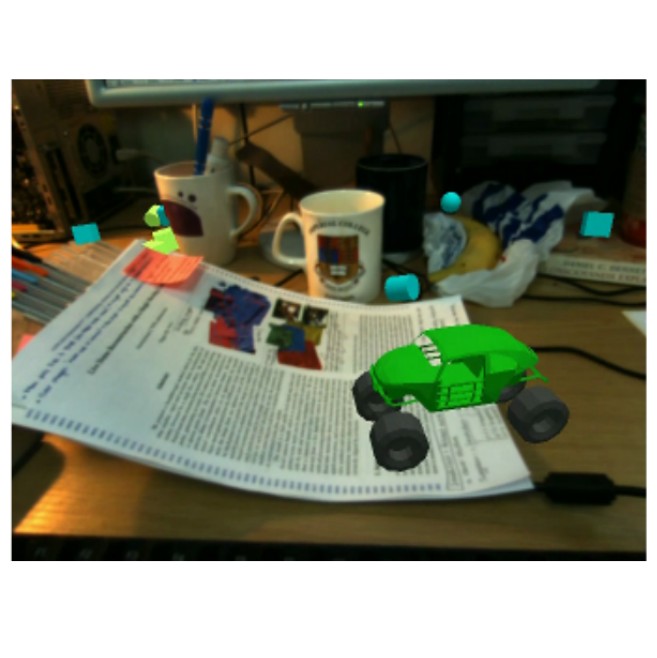

Ideas in perception, robotics, augmented realities and consciousness are what get me going every day, and I enjoy bringing into the world new ideas that can be made to work. In particular, I enjoy thinking of solutions that span both hardware and algorithms, making use of whatever new tools, computing machinery and ways of thinking I can get access to.